3D SAV Final Summary

by Yang Liu on Mar.25, 2011, under Uncategorized

In this class, I mainly focused on the basic techniques covered in the assignments. For the final, I integrated these techniques into one of my video games made in Processing.

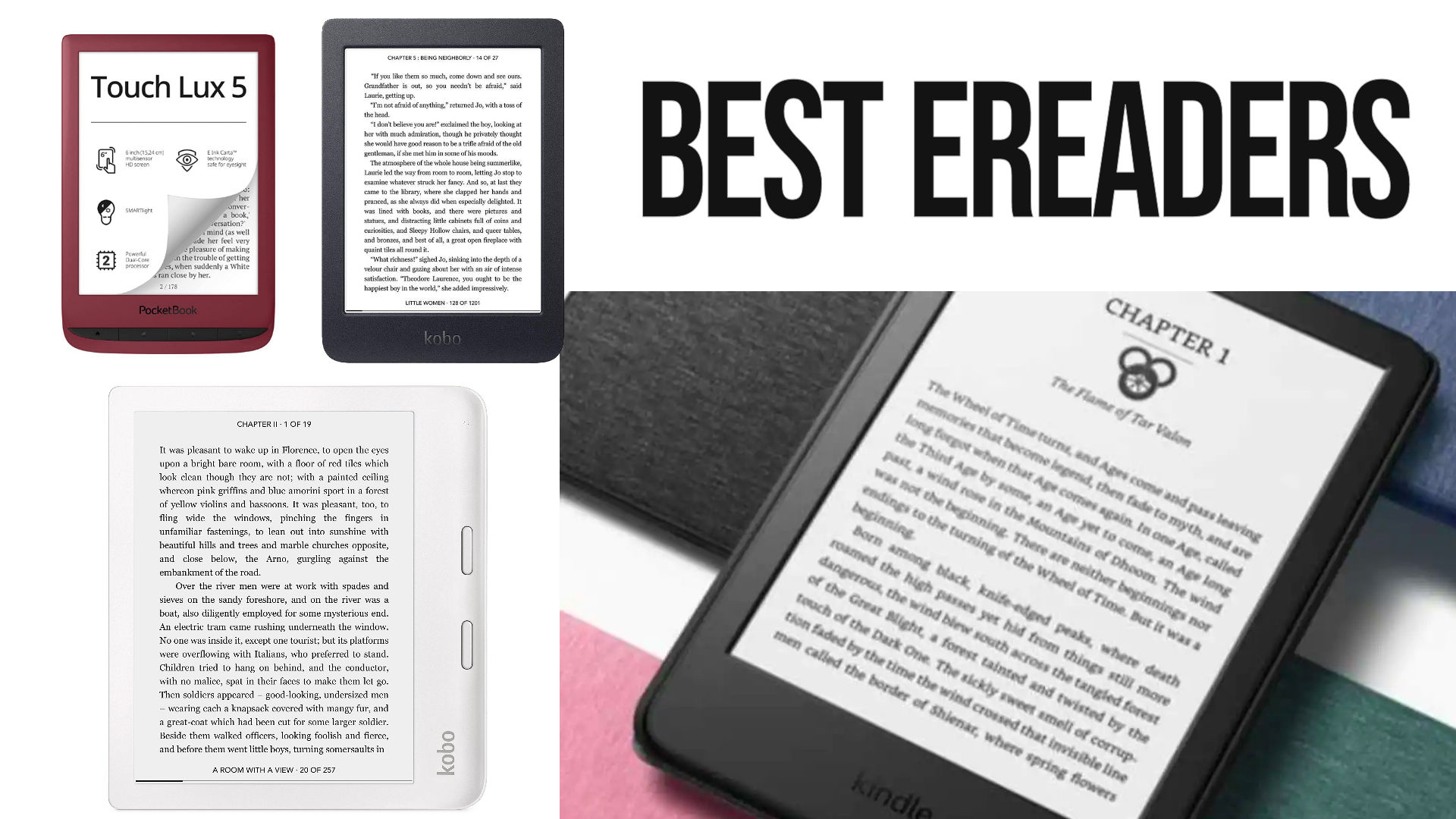

Snowman scan

This was the first assignment. I worked in a group with Ivana, Ezter, Nik and Diana. The final result is the snowman scan, but we first experimented with other techniques such as structured light. We spent a lot of time setting up the environment and tweaking the parameters of the projector and camera, but we could not get a satisfactory result, so we went with the snowman approach. For the code, we exported the blob data from the video as an XML file, and in openFrameworks we redrew the vertices from that file.

Bounding Box in 3D

In this assignment, I implemented the bounding box in 3D space. The algorithm is not much different from the 2D version, but it is more fun to work with. In the 2D world, I cannot “feel” the space from the bounding box alone; the box just follows wherever I go. In 3D space, however, I can “push” the bounding box, extend it, and get real-time feedback. It really helps me feel the 3D space.

For the superman gesture, the program just checks the position of the centroid relative to the bounding box. When the centroid is in the bottom third of the bounding box, it counts as a superman gesture.
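A minimal sketch of that check, assuming a coordinate system where y grows upward (with image coordinates, where y grows downward, the comparison would flip). The `Box` type and the `is_superman` name are made up for illustration and are not taken from the original program.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Vertical extent of a bounding box (hypothetical helper type)."""
    min_y: float  # bottom edge
    max_y: float  # top edge


def is_superman(centroid_y: float, box: Box, fraction: float = 1 / 3) -> bool:
    """Return True when the centroid lies in the bottom `fraction` of the box.

    Assumes y grows upward, so "bottom" means close to box.min_y.
    """
    height = box.max_y - box.min_y
    if height <= 0:
        # Degenerate box: there is nothing to compare against.
        return False
    return centroid_y <= box.min_y + height * fraction


# A centroid at 0.2 inside a box spanning 0..1 is in the bottom third.
low = is_superman(0.2, Box(0.0, 1.0))
# A centroid at 0.5 sits in the middle of the box, so no gesture.
middle = is_superman(0.5, Box(0.0, 1.0))
```

The one-third fraction comes from the description above; it is left as a parameter because the right value probably depends on the camera setup.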

The following is the screenshot of the program.

Kinect with interactions

In this assignment, I implemented a very simple interaction in 3D space. The floating cube can sense objects inserted into it. The program constantly checks how many points are inside the cube. Once the number of points exceeds a threshold value, the cube knows something has been inserted into it and turns red. It is very simple, but it is also an easy and reliable way to do basic 3D interaction. With this technique, I could make a game like the official Kinect game in which players try to block the balls flying toward them: if I make the floating cube in my program move and use the same detection technique, I get a similar game.
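The point-counting test could look roughly like the sketch below. It assumes an axis-aligned cube and a point cloud given as `(x, y, z)` tuples; the function names and the default threshold of 50 are placeholders, not values from the original program.

```python
def count_points_in_cube(points, center, size):
    """Count how many (x, y, z) points fall inside an axis-aligned cube."""
    half = size / 2.0
    cx, cy, cz = center
    return sum(
        1
        for x, y, z in points
        if abs(x - cx) <= half and abs(y - cy) <= half and abs(z - cz) <= half
    )


def cube_is_touched(points, center, size, threshold=50):
    """The cube counts as touched once more than `threshold` points are inside."""
    return count_points_in_cube(points, center, size) > threshold


# Tiny example: three points inside a unit cube at the origin, one outside.
cloud = [(0.0, 0.0, 0.0), (0.1, 0.2, -0.3), (0.4, 0.4, 0.4), (2.0, 0.0, 0.0)]
inside = count_points_in_cube(cloud, center=(0.0, 0.0, 0.0), size=1.0)
touched = cube_is_touched(cloud, center=(0.0, 0.0, 0.0), size=1.0, threshold=2)
```

Using a threshold instead of reacting to a single point keeps stray noisy depth samples from triggering the cube.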

Kinect Blob

In this assignment, I focused on the visual representation of the 3D data from the Kinect. I tried different shaders on the mesh and found that putting a black shader on the mesh gives a 3D blob-like effect. There is no real blob detection in it, but it still looks interesting.

Birds vs. Zombies with Kinect

For the final, I worked on one of my Processing games, Birds vs. Zombies. It is a mashup of Angry Birds and Plants vs. Zombies that uses the Kinect as the controller. It is also an online game, so players can play against each other by connecting a Kinect to their computer.

The Game

The game uses a traditional client-server structure in which both sides keep listening to each other. The clients pass the Kinect data to the server. The server runs the physics simulation on this data, takes a snapshot of the current state every frame, and sends it back to the clients. The clients then redraw the scene from that snapshot. I use Box2D as the physics simulation library and KryoNet as the networking library. Since Box2D is not meant to be used over a network, I needed to change some of the Box2D source code to make it work.
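The data flow for one frame can be sketched as below. This is only an in-memory illustration under loud assumptions: there is no real networking, a one-line velocity update stands in for Box2D, JSON strings stand in for the KryoNet messages, and every class, field and value here is invented for the example.

```python
import json


class Server:
    """Owns the authoritative game state and runs the (toy) physics."""

    def __init__(self):
        # One body with a position and a velocity, standing in for Box2D bodies.
        self.bodies = {"bird": {"x": 0.0, "vx": 0.0}}

    def apply_input(self, message):
        # The server only sees an abstract input, not how it was produced.
        self.bodies["bird"]["vx"] = message["push"]

    def step(self, dt):
        # Placeholder for the physics step.
        for body in self.bodies.values():
            body["x"] += body["vx"] * dt

    def snapshot(self):
        # Serialize the whole state once per frame.
        return json.dumps(self.bodies)


class Client:
    """Reads the controller and redraws from whatever the server sends back."""

    def __init__(self):
        self.scene = {}

    def read_controller(self):
        # Placeholder for the Kinect gesture data.
        return {"push": 2.0}

    def on_snapshot(self, data):
        # The client never simulates; it only mirrors the server state.
        self.scene = json.loads(data)


server, client = Server(), Client()
server.apply_input(client.read_controller())  # client -> server
server.step(dt=0.5)                           # simulate one frame
client.on_snapshot(server.snapshot())         # server -> client
```

Keeping the simulation on the server means every client draws the same state, at the cost of sending a full snapshot each frame.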

The Kinect

The Kinect interaction happens only on the clients. The server doesn’t care what the control mechanism is.

At first, I used the bounding box to detect the hands. It worked but was not stable. Because the depth data from the Kinect is so noisy, the bounding box would sometimes suddenly become larger, and that jump broke the detection.

Then I tried an approach similar to a standard deviation. I calculated the average distance between the centroid and every pixel of the hand and used that distance as the radius of the current gesture. When it is larger than X, the hand is an open palm; when it is smaller than X, it is a fist. Because the measure averages over every pixel, the size is stable enough to control the game.
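A small sketch of this measure, assuming the hand has already been segmented into a list of `(x, y)` pixel coordinates. The function names are illustrative, the threshold is the X from the text and is left to the caller, and treating a radius exactly equal to the threshold as a fist is an arbitrary choice made here.

```python
import math


def mean_radius(pixels):
    """Average distance from the centroid to every pixel of the hand."""
    if not pixels:
        raise ValueError("need at least one hand pixel")
    n = len(pixels)
    cx = sum(x for x, _ in pixels) / n
    cy = sum(y for _, y in pixels) / n
    return sum(math.hypot(x - cx, y - cy) for x, y in pixels) / n


def classify_hand(pixels, threshold):
    """Open palm when the mean radius exceeds the threshold, otherwise fist."""
    return "palm" if mean_radius(pixels) > threshold else "fist"


# Four corner pixels of a 2x2 square: the centroid is (1, 1) and every pixel
# is sqrt(2) away from it, so the mean radius is sqrt(2).
square = [(0, 0), (2, 0), (0, 2), (2, 2)]
radius = mean_radius(square)
```

A single outlier pixel shifts this average only slightly, whereas it can move an edge of a bounding box by its full offset, which is why the averaged radius behaves better on noisy depth data.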

I also implemented hand detection with OpenCV using the Kinect's RGB camera, but it slowed the game down dramatically. Maybe there is a way to optimize it, but for now I have taken it out.

The Kinect works better than pure OpenCV hand detection because it operates in 3D space, so I can pick out the hand using depth information. Players don’t need to wear gloves or play in front of a solid-color background. This way, anyone can pick up the game and play it at home.

All the code can be found here.

Spacial – Where all your social networking comes together

by Yang Liu on Nov.03, 2010, under Uncategorized

Spacial is an easy and fun way to browse all your social networking information on your iPad.

Background

People love to spend time on mobile SNS

The following findings come from Facebook and Ground Truth.

There are more than 150 million active users currently accessing Facebook through their mobile devices. — Facebook

People that use Facebook on their mobile devices are twice as active on Facebook than non-mobile users. — Facebook

The research shows that people love spending time on mobile devices browsing their social networking content.

Browsing on iPad is not fun!!

However, right now, browsing on an iPad, iPhone or other device is not fun, either because the sites are not optimized for touch screens or because they are just regular websites with bigger UI elements.

Facebook and Twitter have mobile versions of their websites, but these merely work and offer nothing interesting. They are just a lot of lists and forms.

On the other hand, some third-party apps, like Flipboard, deliver a better and more interesting experience for users.

It reorganizes all your Facebook and Twitter content in a magazine format, and it became very popular when it launched this summer. That suggests people would love to try a new, more fun way to browse information.

Inspiration

We were inspired not only by existing websites but also by some video games.

Mrdoob.com

mrdoob.com is a personal website. The author, “mrdoob”, has done a lot of HTML5 and Flash work that explores the 2D and 3D HTML5 canvas and other cutting-edge web technologies.

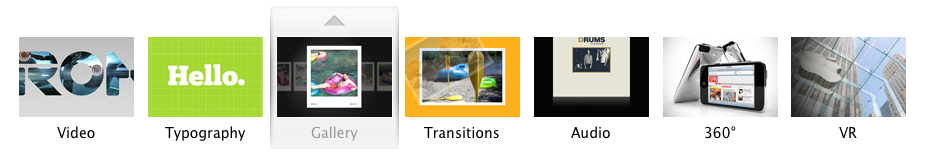

Apple HTML5 Showcase

Apple launched an HTML5 gallery this summer that includes 2D/3D transition effects, web fonts, HTML5 video/audio and VR technology. They are all done in HTML5.

flOw – The Game

flOw is a multi-platform game that had a very unique visual style and interaction mode when it launched. I list it here because the feeling of 3D space in this game is, to some degree, similar to our project.

Here is the trailer of this game on PS3.

Technologies

We are also inspired by current technologies such as HTML5, CSS3 transition and keyframe animation, PHP image processing and JavaScript threading.

What is Spacial?

Though mrdoob and Apple have already done lots of amazing HTML5 work, their target is regular PCs, not touch devices. First, the websites run very slowly on touch devices because they were not optimized for them. Second, they use mouse events to trigger the interaction, which doesn’t take full advantage of the touch screen.

Therefore, we decided to fix these problems and deliver a better experience to users.

First, it is 3D

The whole Spacial webpage lives in a 3D space, like the game flOw. By adding an extra z-axis, we can hold more information on a single page. With the better CSS3 support in Mobile Safari, we can now make 3D transitions much more easily than before. Moreover, we can take advantage of hardware acceleration, which means the performance will be much better than with traditional JS animations.

Second, it is “touchable”

Spacial will be optimized for touch devices (the iPad this time) and will use touch events instead of mouse events. It is therefore a truly “touchable” website made for touch devices.

Third, it is your social networking hub

In Spacial, you can connect the website with your Facebook, Twitter, FourSquare and YouTube accounts. So you can view all your feeds, tweets, images and even videos without leaving the website, or even the webpage.

Last but not least, it is fun!

Even apple.com itself doesn’t have an iPad-optimized version. The mobile versions of Google, Facebook and Twitter are not fun at all! But Spacial will introduce a new web browsing experience you have never seen before. Browsing social networking content will be so interesting that you will never want to look back!

How are we gonna make Spacial real?

Spacial will combine a large number of cutting-edge web technologies to make this “impossible” mission real.

We understand the web tech

We are all web developers and designers. We know HTML, CSS, PHP and JS very well, and we are also able to design attractive and effective interfaces. We will combine CSS3 and JavaScript to build the 3D space on the webpage. The performance will be much better than with the traditional JS-only method.

We understand the Mobile Safari

Some of our members have worked for Apple before, and we know the advantages and limitations of Mobile Safari. We will use certain CSS3 techniques to enable hardware acceleration, and we will put most of the intensive computing work on the server side to reduce the load on Mobile Safari.

We understand the iPad

We know that the iPad’s hardware is limited, so we will optimize our code and media resources as much as we can.

We understand the APIs

As a first step, we will use the Facebook API. We can pull a lot of interesting information from it, like your basic information, your images, your wall comments, the images you are tagged in and even the position of each photo tag. Therefore, besides the innovative interface, we can also try to organize all the information in a new way that makes it easier to view.

What is the risk?

The biggest risk is performance. We will try our best to improve it, but if in the end we are still not happy with it, here are our backup plans.

- Simplify the whole site, take out the unnecessary effects.

- Make it a native app instead of a web app.

- Run it on the iPad simulator.

- Run it on the regular web browser.

We don’t want any of these to happen, but if we have to fall back on them, we need to make sure our project still does something that people have never done before.

GLART week4

by Yang Liu on Feb.24, 2010, under Uncategorized

Here is the homework for week 4. I did some experiments with lighting and materials.

Here is the Java file.

Here are the texture images: wood, stone and metal.

Use spacebar to switch between different materials.

GLART Week3

by Yang Liu on Feb.17, 2010, under Uncategorized

A very simple program to demonstrate how the camera works.

Use arrow keys to control the camera, and use spacebar to switch between perspective and ortho view.

GLART_week2

by Yang Liu on Feb.03, 2010, under Uncategorized

OK, this week we dealt with the translation and rotation of objects. Basic translation and rotation are not very hard, but I tried to write my own utility class, intending to complete the homework with syntax like myquad.translate(). I found that it is not an easy job, because in OpenGL translation works like the reverse of real-world translation. In OpenGL, I first have to move the matrix (which I think also acts as a frame of reference) and then draw the object. In my utility class, however, I want to draw the object first and then use different functions to manipulate it. I couldn’t figure it out this week, but I will keep thinking about it.
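One possible direction, sketched here in plain Python with 2D homogeneous matrices rather than real OpenGL calls, is to let each object carry its own matrix: `translate()` and `rotate()` only update that matrix, and the vertices are transformed when the object is drawn. This is not the unfinished utility class from the homework; the `Quad` class and its methods are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
        for i in range(3)
    ]


def translation(tx, ty):
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]


def rotation(degrees):
    c = math.cos(math.radians(degrees))
    s = math.sin(math.radians(degrees))
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]


class Quad:
    """Hypothetical object-style wrapper: transform calls apply in call order."""

    def __init__(self, vertices):
        self.vertices = vertices
        self.matrix = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # identity

    def translate(self, tx, ty):
        # Pre-multiply, so this step happens after everything already recorded.
        self.matrix = matmul(translation(tx, ty), self.matrix)
        return self

    def rotate(self, degrees):
        self.matrix = matmul(rotation(degrees), self.matrix)
        return self

    def world_vertices(self):
        # Apply the accumulated matrix to each (x, y) vertex.
        m = self.matrix
        return [
            (m[0][0] * x + m[0][1] * y + m[0][2],
             m[1][0] * x + m[1][1] * y + m[1][2])
            for x, y in self.vertices
        ]


# Rotate the point (1, 0) by 90 degrees, then move it 5 units along x.
moved = Quad([(1.0, 0.0)]).rotate(90).translate(5.0, 0.0).world_vertices()[0]
```

Legacy OpenGL calls such as `glTranslatef` multiply the current matrix on the right, so the transform specified last is the first one applied to the vertices, which is probably why the order feels reversed. Pre-multiplying inside the class flips that, so calls read in the order they happen to the object. At draw time, the accumulated matrix could be handed to OpenGL between a matrix push and pop.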

Here are the files, including the unfinished utility class.