
3D SAV Final Summary

by Yang Liu on Mar.25, 2011, under Uncategorized

In this class, I mainly focused on the basic techniques covered in the assignments. For the final, I integrated these techniques into one of my video games made in Processing.

Snowman scan

For the first assignment, I worked in a group with Ivana, Ezter, Nik and Diana. The final result is the snowman scan. We first experimented with other techniques, such as structured light. We spent a lot of time setting up the environment and tweaking the parameters of the projector and camera, but we could not get a satisfactory result, so we went with the snowman approach. In the code, we export the blob data from the video as an XML file, and in openFrameworks we redraw the vertices from that XML file.

Bounding Box in 3D

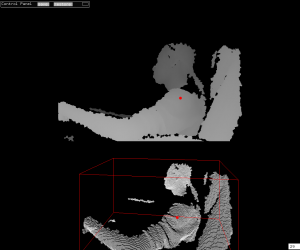

In this assignment, I implemented the bounding box in 3D space. The algorithm is not much different from the 2D version, but it is fun to work with. In the 2D world, I cannot “feel” the space from the bounding box alone; the box just follows wherever I go. In 3D space, however, I can “push” the bounding box and extend it, and I get real-time feedback. It really helps me feel the 3D space.

For the superman gesture, the program just checks the position of the centroid relative to the bounding box. When the centroid is in the bottom third of the bounding box, it counts as a superman gesture.
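The check described above can be sketched in a few lines. This is only an illustration in Python, not the original openFrameworks code; the function name and the assumption that y grows downward (so the bottom of the box has the larger y value) are mine.

```python
def is_superman_gesture(centroid_y, box_top, box_bottom):
    """Return True when the centroid lies in the bottom third of the box.

    Assumes image-style coordinates where y grows downward,
    so box_top < box_bottom. Flip the comparison for a y-up system.
    """
    height = box_bottom - box_top
    if height <= 0:
        # Empty or degenerate bounding box: nothing to detect.
        return False
    # The bottom third starts one third of the height above the bottom edge.
    return centroid_y >= box_bottom - height / 3.0
```

For a box spanning y = 0 to y = 100, a centroid at y = 90 counts as the gesture and a centroid at y = 50 does not.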

The following is the screenshot of the program.

Kinect with interactions

In this assignment, I implemented a very simple interaction in 3D space. The floating cube can sense objects inserted into it. The program constantly checks how many points are inside the cube. Once the number of points exceeds a threshold value, the cube knows something has entered it and turns red. It is very simple, but it is also an easy and reliable way to do basic 3D interaction. With this technique, I could make a game like the official Kinect game in which players try to block balls flying toward them: if I make the floating cube move and use the same detection technique, I get a similar game.
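Here is a minimal sketch of that point-counting test, written in Python for brevity rather than in the language of the original program. It assumes an axis-aligned cube described by a center and an edge length; the function names and the threshold value are hypothetical.

```python
def count_points_in_cube(points, center, size):
    """Count the 3D points that fall inside an axis-aligned cube."""
    half = size / 2.0
    cx, cy, cz = center
    count = 0
    for x, y, z in points:
        # A point is inside when it is within half an edge on every axis.
        if abs(x - cx) <= half and abs(y - cy) <= half and abs(z - cz) <= half:
            count += 1
    return count


def cube_is_touched(points, center, size, threshold):
    """The cube reacts once more than `threshold` points are inside it."""
    return count_points_in_cube(points, center, size) > threshold
```

Requiring more than a handful of points before reacting is what keeps stray noisy depth samples from triggering the cube.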

Kinect Blob

In this assignment, I focused on the visual representation of the 3D data from the Kinect. I tried different shaders on the mesh and found that a black shader gives a 3D blob-like effect. There is no real blob detection in it, but it still looks interesting.

Birds vs. Zombies with Kinect

For the final, I worked on one of my Processing games, Birds vs. Zombies. It is a mashup of Angry Birds and Plants vs. Zombies that uses the Kinect as the controller. It is also an online game, so players can play with each other by connecting a Kinect to their computer.

The Game

The game uses a traditional client-server structure, with the server and clients constantly listening to each other. Each client passes its Kinect data to the server. The server runs the physics simulation on this data, takes a snapshot of the current state every frame, and sends it back to the clients. The clients then redraw the scene from that snapshot. I use Box2D as the physics library and KryoNet as the networking library. Since Box2D is not meant to be used over a network, I needed to change some of the Box2D source code to make it work.
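The flow of data can be shown with a toy, in-memory sketch. It is not the game's code: there is no networking and no Box2D here, the "physics" is a single position moved by a velocity, and every class and method name is made up for illustration. It only shows the shape of the loop: clients send input, the server steps the simulation and returns a snapshot, and clients keep the snapshot for drawing.

```python
class GameServer:
    """Owns the only copy of the simulation state (stand-in for the physics world)."""

    def __init__(self):
        self.frame = 0
        self.positions = {}     # client_id -> x position
        self.latest_input = {}  # client_id -> velocity requested by that client

    def receive_input(self, client_id, velocity):
        # In the real game this would arrive over the network.
        self.latest_input[client_id] = velocity
        self.positions.setdefault(client_id, 0.0)

    def step(self, dt):
        # Advance the toy simulation, then return a snapshot of the new state.
        for client_id, velocity in self.latest_input.items():
            self.positions[client_id] += velocity * dt
        self.frame += 1
        # Copy the state so later steps do not change snapshots already sent.
        return {"frame": self.frame, "positions": dict(self.positions)}


class GameClient:
    """Runs no simulation of its own; it only stores what the server sends."""

    def __init__(self, client_id):
        self.client_id = client_id
        self.snapshot = None

    def apply_snapshot(self, snapshot):
        self.snapshot = snapshot
```

Keeping the simulation on the server means every client draws the same state, at the cost of sending a snapshot every frame.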

The Kinect

The Kinect interaction happens only on the clients. The server doesn’t care what the control mechanism is.

At first, I used the bounding box to detect the hands. It works, but it is not stable. Because the depth data from the Kinect is very noisy, the bounding box sometimes becomes larger suddenly, and that jump breaks the detection.

Then I tried an approach similar to a standard deviation. I calculate the average distance between the centroid and every pixel of the hand and use that distance as the radius of the current gesture. When the radius is larger than X, it is a palm; when it is smaller than X, it is a fist. Because the radius averages over every pixel, it is stable enough to control the game.
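The radius measure can be sketched as follows. This is a Python illustration rather than the Processing code from the game; the function names are hypothetical, `threshold` plays the role of X, and treating a radius exactly equal to the threshold as a fist is my own choice.

```python
import math


def hand_radius(pixels):
    """Mean distance from the centroid to every (x, y) pixel of the hand."""
    n = len(pixels)
    if n == 0:
        return 0.0
    cx = sum(x for x, _ in pixels) / n
    cy = sum(y for _, y in pixels) / n
    return sum(math.hypot(x - cx, y - cy) for x, y in pixels) / n


def classify_hand(pixels, threshold):
    """An open palm spreads its pixels further from the centroid than a fist."""
    return "palm" if hand_radius(pixels) > threshold else "fist"
```

A single noisy pixel can move a bounding box edge a long way, but it shifts the mean distance by only about 1/n of its error, which is why this measure jumps around less.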

I also implemented hand detection in OpenCV using the Kinect's RGB camera, but it slowed the game down dramatically. There may be a way to optimise it, but for now I have taken it out.

The Kinect is better than pure OpenCV hand detection because it works in 3D space, so I can separate the hand from the scene using depth information. Players don’t need to wear gloves or play in front of a solid-color background. This way, anyone can pick up the game and play it at home.
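Selecting the hand by depth can be as simple as keeping the pixels whose distance falls inside a band in front of the player. The sketch below is in Python and assumes the depth frame is a list of rows of distances; the function name, the units and the band limits are assumptions, not values from the game.

```python
def select_hand_pixels(depth, near, far):
    """Return the (x, y) pixels whose depth lies within [near, far].

    `depth` is a list of rows of distance values. Anything outside the
    band, such as the body or the background, is simply ignored, which
    is why no glove or plain backdrop is needed.
    """
    return [
        (x, y)
        for y, row in enumerate(depth)
        for x, d in enumerate(row)
        if near <= d <= far
    ]
```

The pixels returned here are the kind of input a centroid or radius calculation would then work on.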

All the code can be found here.